Is Bun Another New Hot Thing?

The hype around Bun is gradually subsiding, as is usually the case with new frameworks and technologies. I decided to delve into why it generated such excitement and whether it’s worth investing time in its installation and use.

Bun

On September 8, 2023, the production-ready version of Bun 1.0 was released, causing a bit of a stir in the frontend development world. Many saw this as a revolution in the world of bundlers. So, what exactly is Bun?

As stated on the official website, “Bun is an all-in-one toolkit.” The tool is written mostly in Zig and can fill the following roles:

- Node.js: Bun replaces Node.js and eliminates the need for tools such as node, npx, dotenv, nodemon, ws, and node-fetch.

- Transpiler: You no longer need transpilers like tsc, ts-node for TypeScript, or babel, @babel/preset-*. Bun automatically transpiles files with extensions .js, .ts, .cjs, .mjs, .jsx, and .tsx.

- Bundler: Previously used bundlers like esbuild, webpack, parcel, or rollup are no longer required.

- Package Manager: Bun is compatible with npm and can read your package.json file and install dependencies in node_modules, similar to other package managers like npm, pnpm, or yarn.

- Testing Library: Bun also provides a test runner compatible with Jest, supporting snapshots, mocking, and code coverage. This can replace other libraries like Jest, ts-jest, jest-extended, or vitest.

Wow, it sounds impressive, and I can’t wait to try Bun on my own project.

Trying Bun on a Real Project

I have a small project with around 9,000 lines of code - it’s the backend of my dictionary, Dictidex, which will be released soon. According to pnpm, the project has 633 dependencies:

> pnpm install

Lockfile is up to date, resolution step is skipped

Packages: +633

The project is built on Express.js and includes several services for working with the dictionary and third-party APIs. It’s written entirely in TypeScript and uses pnpm as the package manager, vitest for testing, ts-node for transpilation, and nodemon for development.

Testing Environment

- MacBook Pro 16 (M1 Pro), 16 GB RAM

- pnpm 8.7.5

- bun 1.0.0

It’s important to note that these tests do not claim to be highly accurate. My goal is to demonstrate the expectations of an ordinary user.

Testing

Cold Start - pnpm

Before starting the installation, we clear the entire cache:

pnpm store path | xargs rm -rf

Then we proceed with the installation:

> time pnpm install

Lockfile is up to date, resolution step is skipped

Packages: +633

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

Progress: resolved 633, reused 0, downloaded 633, added 633, done

dependencies:

+ @prisma/client 5.1.0

+ axios 1.4.0

+ cors 2.8.5

...

Done in 14.3s

pnpm install 5.98s user 7.59s system 94% cpu 14.404 total

The installation took 14.4 seconds. For comparison, the same installation with npm took 57.4 seconds:

> time npm install

added 676 packages, and audited 677 packages in 57s

161 packages are looking for funding

run `npm fund` for details

found 0 vulnerabilities

npm install 10.99s user 4.25s system 26% cpu 57.366 total

This is nearly 4 times slower than pnpm!

Cold Start - bun

Similarly, before starting the installation, we clear the cache:

bun pm cache | xargs rm -rf

Then we proceed with the installation:

❯ time bun install

[0.05ms] ".env"

bun install v1.0.0 (822a00c4)

+ @tsconfig/node18@18.2.2

+ @types/cors@2.8.14

+ @types/express@4.17.18

...

639 packages installed [20.49s]

bun install 1.77s user 3.47s system 25% cpu 20.510 total

Surprisingly, on a cold start bun was slower than pnpm: 20.5 seconds against 14.4, or about 1.4 times as long. One possible explanation is CPU usage: during the pnpm installation the processor was utilized at 94%, whereas with bun it was only 25%, which suggests bun spent most of that time waiting rather than working.
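As a rough check on those CPU percentages: the figure printed by `time` appears to be user plus system CPU time divided by wall-clock time, truncated to a whole percent. The sketch below recomputes it from the two cold-start outputs above. The `TimeResult` type and the `cpuUtilization` function are illustrative names introduced here, not part of any tool.

```typescript
// Values copied from the cold-start `time` outputs above, in seconds.
interface TimeResult {
  user: number;   // CPU time spent in user space
  system: number; // CPU time spent in the kernel
  total: number;  // wall-clock time
}

// Assumption: the shell reports (user + system) / total as a truncated percentage.
function cpuUtilization(t: TimeResult): number {
  return Math.floor(((t.user + t.system) / t.total) * 100);
}

const pnpmCold: TimeResult = { user: 5.98, system: 7.59, total: 14.404 };
const bunCold: TimeResult = { user: 1.77, system: 3.47, total: 20.51 };

console.log(`pnpm cold start: ${cpuUtilization(pnpmCold)}% cpu`);
console.log(`bun cold start: ${cpuUtilization(bunCold)}% cpu`);
```

A value well below 100% means the process was idle for most of the wall-clock time, presumably waiting on the network or the disk. A value above 100% means more than one core was busy at once.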

Well, perhaps using the cache will yield much better results.

Hot Start - pnpm

We run the installation script:

> time pnpm install

Lockfile is up to date, resolution step is skipped

Packages: +633

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

Progress: resolved 633, reused 633, downloaded 0, added 633, done

dependencies:

+ @prisma/client 5.1.0

+ axios 1.4.0

+ cors 2.8.5

...

Done in 3s

pnpm install 2.92s user 8.96s system 382% cpu 3.102 total

This time, pnpm completed the task in an incredible 3.1 seconds, about 4.6 times faster than its cold start!

Hot Start - bun

We execute the installation script:

❯ time bun install

[0.05ms] ".env"

bun install v1.0.0 (822a00c4)

+ @tsconfig/node18@18.2.2

+ @types/cors@2.8.14

+ @types/express@4.17.18

...

639 packages installed [506.00ms]

bun install 0.02s user 0.25s system 50% cpu 0.522 total

Wow! That was lightning fast. The installation completed in 0.5 seconds, roughly 40 times faster than Bun’s own cold start. Truly an impressive result!

We have verified that on a hot start, Bun installs this project’s dependencies about 6 times faster than pnpm (0.5 seconds against 3.1). But why did we conduct these tests, and what do I want to convey with this post?
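The ratios quoted in this section can be recomputed from the `total` column of the `time` outputs. This is a minimal sketch; the `wallClockSeconds` object and the `speedup` helper are names made up for the illustration. It uses the unrounded totals, so the results can differ slightly from ratios computed on timings rounded to one decimal (for example 20.5 s and 0.5 s).

```typescript
// Wall-clock totals (seconds) copied from the `time` outputs in this post.
const wallClockSeconds = {
  npmCold: 57.366,
  pnpmCold: 14.404,
  pnpmHot: 3.102,
  bunCold: 20.51,
  bunHot: 0.522,
};

// How many times faster the second measurement is than the first.
function speedup(slower: number, faster: number): number {
  return slower / faster;
}

const t = wallClockSeconds;
console.log(`npm cold vs pnpm cold: ${speedup(t.npmCold, t.pnpmCold).toFixed(1)}x`);
console.log(`pnpm cold vs pnpm hot: ${speedup(t.pnpmCold, t.pnpmHot).toFixed(1)}x`);
console.log(`bun cold vs bun hot:   ${speedup(t.bunCold, t.bunHot).toFixed(1)}x`);
console.log(`pnpm hot vs bun hot:   ${speedup(t.pnpmHot, t.bunHot).toFixed(1)}x`);
```

Each number comes from a single run on one machine, so the ratios are indicative rather than precise.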

New bundlers and libraries are great, and it’s exciting to see the industry evolving. However, is this roughly 6x improvement essential for my project? Most likely not, as pnpm handles its tasks well. Additionally, my current Dockerfile already leverages layer caching for dependency installation, which significantly speeds up the process even with npm.

You might argue that Bun is not just an installation tool but also a transpiler and a test runner. But is it worth migrating my project and altering configurations? For small personal projects, this might be a straightforward task. For large projects with extensive codebases and outdated library versions, it deserves a second thought. In the real world, shaving a few seconds off a deployment is rarely as crucial as it may seem. Design patterns, architecture, and code optimization usually matter more.

On this topic, I recommend checking out Alexey Simonenko’s talk How I Stopped Believing in Technologies.

In Conclusion

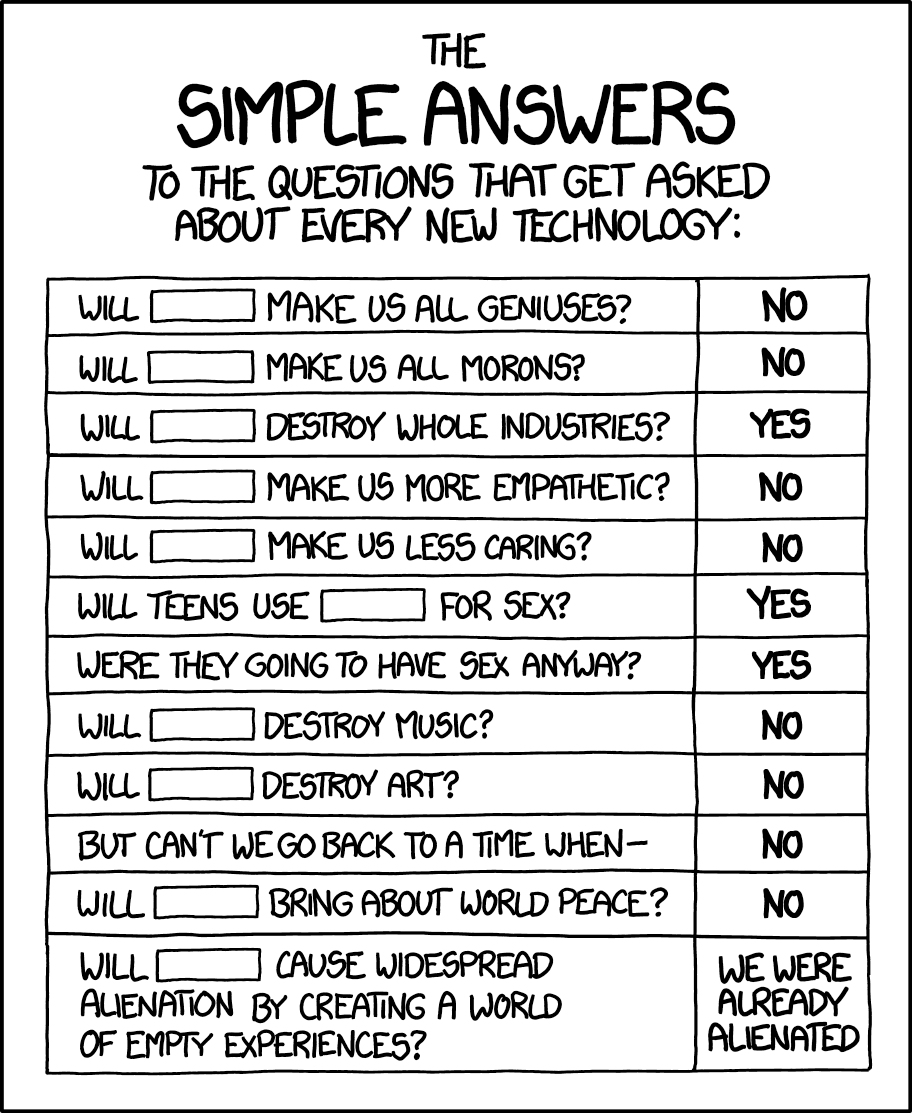

My main point here is that it’s crucial to sensibly assess the need for transitioning to new technologies rather than blindly following trends. Experimenting with new tools is valuable, but it’s essential to keep asking whether the change is genuinely necessary and to weigh all the circumstances. There’s no need to rewrite projects from scratch every year with new frameworks, especially if the current solutions are performing well. Updating libraries and moving to new major versions should be done thoughtfully, and it can pay off: upgrading React from version 16 to 18, for instance, brings exciting new capabilities.

There’s a well-known case where a team decided to rewrite a project with over a decade of history from the ground up, yet the old project continued to run in parallel! The result was two extensive codebases that became difficult to maintain, particularly after the people who initiated the new version left.

In conclusion, I want to emphasize that this post isn’t just about Bun; it’s also about reflecting on the never-ending race for new technologies. Experimenting with fresh solutions is beneficial, but before doing so, it’s essential to ask whether it’s genuinely necessary and to carefully weigh all decisions.